If You Are Rethinking Your Ethics – In Line With Developments In Digitalisation, Artificial Intelligence, Superintelligence And Singularity

Stuart D.G. Robinson

24 December 2017

A Synopsis of Stuart Robinson’s Article about Ethics

by Alan Ettlin

In this sequel to Stuart Robinson’s article on visions, he goes two levels beyond where discourse on digitalisation, artificial intelligence, superintelligence, singularity and crucially, ethics is currently being led. Stuart does not address the ethical questions surrounding the programming of emergent intelligence including the ensuing dilemmas. Instead, he chooses to illuminate the ethical premises which underlie the motives of the predominant forces and figures behind the contemporary technological developments which are impacting on humanity with unprecedented velocity and magnitude – without even asking humanity for permission. In a nutshell, Stuart addresses the question as to whether we have put the cart before the horse: have we neglected to address ethical singularity before pursuing technological singularity with such might?

Stuart illustrates how the key driving forces themselves are contained within ethical systems which have not changed since the Middle Ages. Such ethical systems are described by Stuart as being anthropocentric and each based on such circular¹, self-perpetuating and self-legitimising reasoning that not only their further development but also any meaningful dialogue between the systems is precluded. In a central section of the article, Stuart expounds the possibility that the forces behind contemporary developments in AI may, wittingly or unwittingly, be trapping humanity within their respective ethical systems for yet another aeon to come.

Furthermore, using examples of developments emerging from the West Coast of the US, Stuart illustrates what might be described by some as a disturbing similarity between the ethical systems pertaining there and Nietzschean ethics. In this respect, he refers not only to Nietzschean concepts such as the super-human, but even more pertinently to the self-inflicted exclusion of those who do not follow.

In a surprising turn, Stuart proposes that the future development of AI could indeed offer humanity – and therefore the leaders, supervisory and executive boards of organisations around the world – the opportunity to escape this “Singularity Comedy” and finally capture what Dante was attempting to show us in his Divine Comedy seven hundred years ago.

¹ i.e. that by their sheer existence fulfil the necessary and sufficient conditions for their existence.

If You Are Rethinking Your Ethics

in line with developments in digitalisation, artificial intelligence, superintelligence and singularity

In this article, subtitled ‘The Ethical Singularity Comedy’, we will be exploring two central questions which also serve as the key sections of the text:

Which ethical premises underlie predominant forces behind the development of digitalisation, artificial intelligence, superintelligence and singularity?

What challenges to current thinking and ethics could the supervisory and executive boards of organisations around the world feel obliged to address and resolve at the level of corporate ethics before it is too late?

The Ethical Singularity Comedy

Table of Contents

Introduction

Section 1 Which ethical premises underlie predominant forces behind the development of digitalisation, artificial intelligence, superintelligence and singularity?

1.1 Technological singularity prior to ethical singularity: Have we put the cart before the horse?

1.2 Digital transformation and ethical stagnation: Why is the argumentation of ethical legitimacy still circular?

1.3 Unde venis et quo vadis, contemporary ethics

1.4 Ethical integrity: Why have we invented such an impediment to ethical transformation?

1.5 Uninventing ethics: Is the development of AI and SI challenging ethical integrity?

1.6 Ethical transformation: Lying in wait for centuries until the advent of AI?

Section 2 What challenges to current thinking and ethics could the supervisory and executive boards of organisations around the world feel obliged to address and resolve at the level of corporate ethics before it is too late?

2.1 The Challenge of Legitimacy: Corporate Ethicality Questions

2.2 The Challenge of Multi-Ethicality: Singularity Tragedy or Singularity Comedy?

2.3 The Challenge of Ethical Transformation: Corporate Options and Corporate Questions

2.4 The Challenge of Ethical Foresight and Accountability: Transformational Contingency

Concluding Reflections

Introduction

According to Joseph Carvalko, who is Professor of Law, Science and Technology at Quinnipiac University School of Law,

In a similar vein, David Pearce, co-founder of what was originally named the World Transhumanist Association, writes:

Citing from an interview with David Hanson, founder of Hanson Robotics based in Hong Kong, John Thornhill writes in the Financial Times:

He adds: “I want robots to learn to love and what it means to be loved and not just love in the small sense. Yes, we want robots capable of friendship and familial love, of this kind of bonding.”

“However, we also want robots to love in a bigger sense, in the sense of the Greek word agape, which means higher love, to learn to value information, social relationships, humanity.”

Mr Hanson argues a profound shift will happen when machines begin to understand the consequences of their actions and invent solutions to their everyday challenges. “When machines can reason this way then they can begin to perform acts of moral imagination. And this is somewhat speculative but I believe that it’s coming within our lifetimes,” he says. (Thornhill, 2017)

Projections such as these trigger fundamental thoughts about the human condition which we will go on to explore below. The idea of autonomous humanoids and biosynthetically engineered humans also raises significant questions about the legitimacy and responsibility-ownership of their actions and suggests the need for, or the advent of, paradigmatic changes in the fields of law and ethics, e.g.

- Will ‘human rights’ have to be modified to rights for all forms of ‘autonomous intelligence’ – and, if so, how should the latter be defined?

- How should vulnerable groups be protected by law from the dangers of entering into affectional connections with robots, including sex robots?

- Will a specific legal status need to be created for autonomous robots as having the status of ‘electronic persons’?

- Will judges and juries have to sit in front of an AI artefact and justify their decisions to it before – or even after – sentence is passed on a human-being?

More fundamental still are questions in the field of ethical premises such as

- Which or what type of institution or intelligence is legitimised – or legitimises itself – to pose and answer the above questions, and based on what premises of legitimisation? – or will ‘legitimacy’ become an obsolete concept and, if so, what will emerge around the space which it currently occupies?

- How can responsibility for value-creation or value-damage be assigned in situations where ‘emergent’ behaviour through what humans presume to have been collusion between e.g. an unquantifiable number of AI-artefacts has arisen, i.e. behaviour which could be proven to have been unpredictable for any of the programming bodies, even if the colluding artefacts could be identified?

- Also, if ‘slight contacts’ (Chodat, 2008, p. 25) with other human or non-human minds can be identified as the veritable sources of value-creation or value-damage, how should ownership or perpetration be assigned?

- In other words, how will the space evolve which is currently occupied by concepts such as ‘agency’, ‘ownership’, ‘perpetrator’ and ‘value’?

As remarked by Amartya Sen in ‘The Idea of Justice’:

or, returning to the field of human morals:

- Should we, certain humans or organisations in a subset of human society, consider undertaking the creation of humanoids to whom we, or others, would be – or might feel – morally committed before we provide adequate care for the millions of co-humans who are suffering to the point of death from the lack of basic requirements such as food, water and shelter?

- Will the financiers of developments in artificial intelligence (henceforth ‘AI’) and superhuman intelligence (henceforth ‘SI’) be made answerable and liable by their own artefacts for their provenly immoral allocation of resources and profits – which they generated through exploiting the data of their consumers?

Putting the last questions temporarily to one side, we note that the Civil Law Rules on Robotics of the European Union includes the commissioning of a ‘Charter on Robotics’ which should not only address ethical compliance standards for researchers, practitioners, users and designers, but also provide procedures for resolving any ethical dilemmas which arise (European Parliament, 2017). We will return to issues surrounding the content of the Civil Law Rules on Robotics in the second section of this paper. At this point, we note that the wording of the document makes it apparent that the European Parliament has recognised that the development of AI is leading jurisprudence and ethics into uncharted territory at a very high speed; not only this, and crucially for the focus of this paper, the chosen wording shows attentiveness to the consideration that the European Union cannot allow the legal regulations and societal ethics contained in its Charter of Fundamental Rights (European Union, 2017) to put its members’ national economies at a disadvantage in the global race towards digital supremacy. In other words, ‘business ethics’ may factually override ‘societal ethics’ in determining the veritable ethics of the development of digitalisation, AI, machine intelligence and machine learning (both of which we will henceforth subsume for the purposes of this paper under ‘AI’) and also of SI. For a more detailed treatment of the concept of ‘business ethics’ – including the ‘whole ethical package’ within which business-managers factually function – and the position of ‘ethical neutrality’ which underlies the writing of this article, the reader is referred to a previous paper entitled ‘Interethical Competence’ (Robinson, 2014).

An article published by Deloitte University Press cites estimates that worldwide spending on cognitive systems will reach $31 billion by 2019 and that the size of the digital universe will double each year to reach 44 zettabytes by 2020 (Mittal et al., 2017). Even if these estimates turn out to be only partially accurate, the facts are that the world economy and society in general are undergoing change of unprecedented dimensions and that only a minority of bodies have any significant measure of influence and control over these evolutionary changes. As one example, national economies now find themselves left with no choice but to put in place the infrastructure and expertise needed to ensure that their population can sustainably enjoy ubiquitous connectivity of the most up-to-date technical standards. Another example lies in the fact that India and China have gained a key competitive advantage by currently graduating at least 15 engineers for each one who graduates in the US; in 2020, the European Union will lack an estimated 825,000 professionals with adequate digital skills to avert global economic insignificance for the Union (European Parliament, 2017).

As we will discuss in more detail in Section 1 of this paper, economic motives, competition and various forms of aspiration towards supremacy lie at the core of contemporary AI-development-ethics. Assuming that business and other forms of ethics do, and will, factually override societal ethics, there will be a faster and more fundamental impact on the way in which most businesses are run than is generally anticipated.

According to former Professor of History at Indiana University, Jon Kofas:

A further set of motives and competition underlying contemporary digital developments is of a politically and ideologically hegemonial nature. Increasingly, the opinion is being voiced that AI is turning into the next global arms race, i.e. the race for supreme control and the protection of sovereignty so as, at least, not to be controlled by others. This means that the ‘ethics of global supremacy’ could factually override both business ethics and societal ethics – that is, for as long as ‘artificial ethics’ or superintelligence do not factually take the upper hand.

In an open letter to the United Nations on 21st August 2017, with one hundred and seventeen notable C-level signatories, the Future of Life Institute declares that it:

and warns:

In a BBC Interview, Professor Stephen Hawking makes reference to the darker side of influence, control and hegemony, where further codes of ethics are active:

In relation to an intervention into the workings of the dark web by the Federal Bureau of Investigation, the US Drug Enforcement Agency, the Dutch National Police and Europol, Dimitris Avramopoulos, European Commissioner for Migration, Home Affairs and Citizenship, remarks:

Out of the reach of public accessibility are also the exact developments which are being made in the field of genetics, including major projects to discover the genetic basis of human intelligence in institutions such as the Cognitive Research Laboratory of the Beijing Genomics Institute. The little information which is indeed publicly available can leave us wondering about the extent to which national or racial eugenic motives and policies might be involved or could indeed already be shaping which super-species will one day be posing and answering questions such as those laid out above, i.e. not artificial ethics or superintelligence, but ‘super-species intelligence’ or other forms of phenomena which have not yet emerged.

If the ethics of global supremacy and power are indeed an inevitable motive behind the exploitation of contemporary exponential global economic and technological developments, if financial and intellectual resources do indeed remain key elements of this form of power and if the ethical super-dice do thereby remain factually unturned from the 20th and far into the 21st Century, then the consequences of inequalities of influence will foreseeably increase in similarly exponential dimensions both internationally and intranationally. Moreover, if bodies such as the United Nations continue to try to promote the mono-ethical stance of democracy and equal opportunity in a universalistic manner, if e-democracy continues to be used to promote democratic behaviour and to impact on electoral processes and referenda on a global scale, if cryptocurrencies continue to be used for anarcho-capitalist motives, if organised crime, cybercrime and commercially disruptive technology continue to undermine the activities of both business and politics at home and around the globe, then it is likely that international strife will not be lessened, but exacerbated even further than it is today – as we see in widely differing geopolitical issues involving nation-states such as North Korea, Iran, Myanmar, Syria, Iraq, Afghanistan, Ukraine and Yemen.

Stephen Hawking goes even further: he warns us of dangers which extend beyond the tensions of international criminal, racial and ideological supremacy:

As noted by John Thornhill writing in the Financial Times, the phenomenon of AI-artefacts generating dangerous information, political statements and actions which are unpredictable and incomprehensible for their architects, so-called ‘emergent’ behaviour, is already a reality. (Thornhill, 2017)

Viewed in the light of these developments, it is not surprising that the frequency with which the word ‘anxiety’ is used appears now to be peaking at a level not seen since the first half of the 19th Century. (Google, 2017a) Almost two centuries ago, European society was coming to terms with the consequences of the Industrial Revolution and empowering itself through what has been termed the ‘People’s Spring’. The latter was a fundamental political disruption which began in France and triggered the replacement of feudalism with democratic nation-states on a pan-European scale. It was at this historical juncture that significant foundations were laid for modernist societal ethics in Europe, as in the works of Charles Darwin (1809-1882), Karl Marx (1818-1883) and, not least, Friedrich Nietzsche (1844-1900), a philosopher whose influence on 20th and 21st century ethics in the East and West we will discuss below in Section 1.

The parallels between what was taking place in the first half of the 19th Century and contemporary, closely interwoven developments in technology, economics, politics, ethics and society at large are self-evident. Just as then, manual work and skills are currently being replaced by technology. This is now happening on such a grand scale and with such speed that, for example, what just a few years ago was regarded in India, Pakistan and Bangladesh as a ‘demographic dividend’ has metamorphosed into a ‘demographic nightmare’. In these three countries together, around 27 million people currently work in the clothes manufacturing industry for some of the lowest wages in the world. This region of the world alone is expected to bring another 240 million low-wage manual workers to the labour market over the next 20 years; but within 10 years, due to developments in robotics, clothes manufacturing is likely to be fully relocated to the countries where the clothing is needed, thus creating massive unemployment in South Asia (Financial Times, 2017): that is, unless these economies possess the resources to take the further development of digitalisation, AI and SI out of the hands of their current owners or unless the ethical super-dice are re-thrown and there is a fundamental change in global ethics.

In Section 1 of the paper, we will examine contemporary ethics in more detail with a view to illuminating, in Section 2, some of the major challenges to current premises which underlie corporate visions, strategies, cultures and ethics. Resolving these challenges will include addressing the matter of personal and collective accountability for the ‘ethical footprint’ (Robinson, 2014) which senior managers and their organisations leave behind.

For reasons of focus and relevance, the following discussion will not primarily address issues such as how robots can be programmed top-down with humanitarian law, i.e. to behave in an exemplary manner according to human ethical standards. Such matters are handled by numerous scientists and authors including Alan Winfield, who is Professor of Robot Ethics at the University of the West of England. Instead, we will investigate the nature of the ethical premises which underlie contemporary mainstream commercial and engineering developments in digital technology, taking information from the Singularity University which is based in the Silicon Valley area of the USA as a concrete example; we will also focus on the deep-reaching influence on contemporary ethics of the works of one philosopher in particular, Friedrich Nietzsche, again as a specific and relevant example – and with no intention to discount the influence of other philosophers such as Immanuel Kant (whom we shall briefly mention below), Bertrand Russell, Peter Strawson, John Dewey or others. In focussing on the works of Friedrich Nietzsche, we will also draw specifically on the significant ethical legacies of Homer, Dante Alighieri, Giacomo Leopardi and Emil Cioran.

As the article progresses, we hope to illuminate why, in an article published in Forbes and entitled ‘The Forces Driving Democratic Recession’, Jay Ogilvy cites the fears of Francis Fukuyama that the global democratic recession may turn into a global democratic depression and then writes the following about one of the founders of the Singularity University, also Director of Engineering at Google:

We will also shed light on why Alan Winfield, in his widely recognised status as a robot ethicist, as mentioned above, feels compelled to state the following in a BBC interview:

By examining the historical and contemporary ethical backcloth to statements such as these, we hope to contribute to the thought processes and decisions which will be made in the further development of AI and SI by scientists, software engineers, senior business managers and regulatory bodies.

In our Concluding Reflections, we will look to AI and SI as a source of inspiration for radical ethical transformation.

1. Which ethical premises underlie predominant forces behind the development of digitalisation, artificial intelligence, superintelligence and singularity?

1.1 Technological singularity prior to ethical singularity: Have we put the cart before the horse?

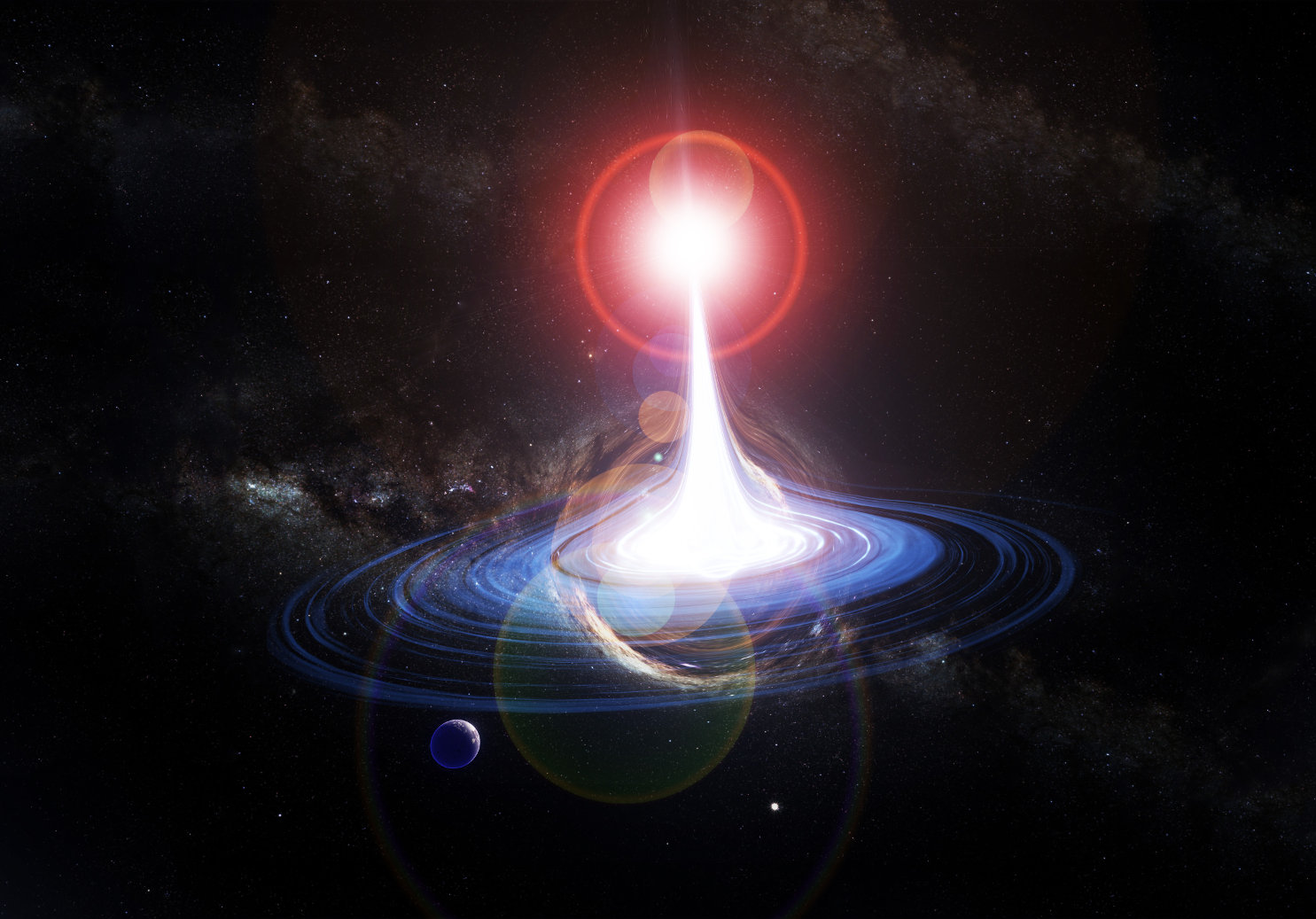

In this image (Britschgi, 2018), we see a graphical representation of the concept of singularity from the field of physics where it can be understood as follows:

The use of the term ‘singularity’ is to be found half a century earlier in relation to conversations which took place between the two mathematicians, Stanislaw Ulam and John von Neumann:

Today, we find that the definition of the term ‘technological singularity’ cited in Wikipedia includes the term ‘artificial superintelligence’ as follows:

When one enters the website of the Singularity University (Singularity University, 2017), which is based in San Francisco, one is confronted with a vocabulary which – viewed in its totality – expresses the set of ethical premises which the website contributors and the founders of the Singularity University apparently share, or at least those which they find appropriate to communicate to the outside world. What is salient about the vocabulary and the premises which they express is that, from an ethical point of view, little, if anything, seems to have changed since the beginning of the 20th Century – which is possibly partly indicative of the inherent paradox in which AI-theorists and practitioners, as well as philosophers, now find themselves: lacking concepts which are yet to be conceived and might even be inconceivable for the human species, one is compelled to use terms and concepts which will predictably one day become obsolete or, at most, ‘historically interesting items’.

The fact that nothing of major significance seems to have changed from an ethical point of view could, however, be indicative of something which we hypothesized above: namely that the ethical super-dice will factually remain unturned for centuries to come. The evolution of ethical-political history may indeed have reached an end, in one part of the world at least, with the advent of modernism, of liberal democracy and of life in general – including politics, health and education – being driven materialistically by the market economy. This thesis was proposed by Francis Fukuyama in ‘The End of History and the Last Man’ (Fukuyama, 1992) and partly revised in his later works including ‘Our Posthuman Future: Consequences of the Biotechnology Revolution’ (Fukuyama, 2002). This leads us to examine the ethical super-dice hypothesis in more depth using the example of the Singularity University’s website. At the time of writing, ethically salient vocabulary and phrasing include,

These and very similar phrases were used with high frequency by the majority of key speakers at the Singularity University’s Global Summit 2017 (Singularity University, 2017) in San Francisco:

In terms of ethical premises, these declarations and affirmations can be understood to imply

- that the human condition is – or should be – a state of physical and psychological autonomy whereby human-beings

  - assume self-responsibility,

  - create and select their own values and priorities and

  - act in accordance with their self-assumed self-responsibility, values and priorities.

The main body of statements made at the 2017 Singularity University Global Summit (henceforth SU-GS) arguably implied the related core ethical premise, i.e.

- that there is an imperative for assuming a self-legitimising, proactive form of self-determination and human will in order to create and sustain individual and collective mindsets of existential certainty.

Taken together, these ethical premises presuppose that human-beings possess a faculty for autonomy, for the creation and reception of imperatives – as also posited by Immanuel Kant with his concept of the ‘categorical imperative’ (Kant, 1871) – and for willing, doing and achieving. Importantly for the purposes of this paper, the cited affirmations can be taken to imply the self-legitimised creation and selection of ethical premises along the following lines:

- there is no higher ethical authority than the members of human societies who,

- in necessarily recognising not only self-ness but also other-ness within a given community/society,

- possess the faculty of ‘will’ to empower themselves and each other

- with the ethical imperative of attaining standards of thoughts, intentions and behaviour which,

- in being agreed in the community/society,

- means that the attainment of those standards acquires and deserves societal recognition, i.e. through being seen to be doing/thinking ‘the right thing’.

The above would imply that there ‘is’ no ethically pre-ordaining, judging, life-determining, omnipotent, theistic or other form of metaphysical authority or force who/which oversees human thoughts, intentions and behaviour. Consequently, nothing stands in the way of pursuing the self-defined standards which were proclaimed at the SU-GS and involved the advancement of digitalisation, SI and AI – such as

Whilst assuming a form of BEING which is based on some of the key premises upon which the worldviews of modernism and liberal democracy have been built – including atomistic reason, logic and empiricism as well as a universalistic aspiration for egalitarianism and self-determination – factually, of course, the speakers were making their declarations in a global context. Humanity comprises not one, but multiple worldviews whose respective constellations of key premises fundamentally differ. There are, for example, numerous contemporary societies which assume a form of BEING which is based on a pre-determined, theistically-based human condition in which one’s thoughts and acts are overseen and judged by a higher authority; the latter preordains the code of ethics by which human thoughts, intentions and behaviour are judged.

If we disregard for one moment the notion that only one worldview can be universally valid (in the sense that those who adhere to a different worldview to one’s own are regarded as ‘underdeveloped’ or ‘sacrilegious’ etc.), if we disregard the theoretical possibility of a global state let alone a global democracy (Sen, 2009, p. 408) and if we integrate the full implications of multi-ethicality into our view of the human condition, then the question arises as to the legitimacy of the ethical premises which underlie not merely the affirmations cited above, but also the whole field of the development of digitalisation, AI and SI. Arguably, there should have been global agreement on these ethical premises before the latter developments were initiated.

1.2 Digital transformation and ethical stagnation: Why is the argumentation of ethical legitimacy still circular?

At this point in the discussion, we propose that implementable and sustainable answers to the question of legitimacy are required, perhaps urgently so, given the exponential, world-engulfing dimensions and aspirations of these developments and given that the premise of legitimacy (to which we will return below) is currently a crucial ingredient for the human condition and human ethics as many of us have grown to know them. However, finding solutions between differing ethical standpoints by means of verbal argumentation generally proves highly challenging, if not impossible, as the following example shows.

Whilst the ethical standpoints and behaviour of ‘A’ and ‘B’ are each intrinsically consistent, they are virtually impossible to reconcile with each other because of their respective circularity, the latter being a crucial ‘built-in’ feature for the sustainability of any ethical standpoint. Without tight circularity, a given ethical standpoint serves no purpose and has no ‘raison d’être’, rather like an undefined working hypothesis.

It is not surprising, therefore, that we find the phenomenon of circularity in the argumentation of legitimacy concerning innumerable ethical issues in society. Examples of never-ending ethical dispute include whaling, badger-culling, fox-hunting, genetic engineering, intensive farming, wind farms, ethnic homogeneity, population control, euthanasia, homosexuality, contraception, climate control, organic agriculture, foreign aid, nuclear weapons, land-mines, mineral resources, the publication of state secrets and free speech. Each standpoint has its own water-tight case for legitimacy, as was the case with the violent events and demonstrations which took place at the beginning of August 2017 in Charlottesville, Virginia, and in Mountain View, California: some people were asserting their lawful right to free speech and others were asserting the lawful right to egalitarian treatment regardless of race, religion and gender. Professor Venkatraman of Boston University commented at the time that the main problem was the fact that meaningful dialogue between the ethically polarised factions was just not possible. (Venkatraman, 2017a)

In the case of digitalisation and AI, thousands of developers worldwide are legitimising themselves to pursue the objective of creating maximal levels of autonomy in their own image such as through proprioception (identifying and resolving internal needs such as the recharging of batteries), exteroception (adapting behaviour to external factors) and autonomous foraging (identifying and exploiting existentially critical resources). The levels of intelligence and self-sufficiency of their artefacts, including emergent, i.e. unpredicted and unpredictable behaviour and even disobedience to human instructions, have been increasing so rapidly that figures like Stephen Hawking, Nick Bostrom, Bill Gates and Elon Musk are now publicly urging prudence, e.g. in the development of autonomous weaponry. However, their own self-legitimised argumentation seems largely ineffective and restricted to the raising of verbal warning fingers in the name of mankind, as is to be found in a BBC interview with Stephen Hawking. (BBC, 2014) Ardent opponents of digitalisation and AI also raise their own arguments, but genuine agreements are currently scarce.

Pre-empting the discussion in Section 2, the managers of organisations in the field of digitalisation around the world currently have fundamental decisions to make:

- Will they allow themselves to fall into ethical circularity concerning legitimacy, or not?

- Will they search for a way to avoid it, and, if so, how? Or will they choose to ignore it and proceed with their current set of ethical premises?

- Will they wait for others to point the way, e.g. in order not to lose their competitive advantage in the short- and mid-term, and then follow them?

However, all three decisions are clearly lacking in long-term adequacy. As mentioned in the Introduction, the responsibility for the ethical footprint of an organisation (Robinson, 2014) arguably lies within the personal and collective accountability of its senior managers. If the managers themselves and/or if third parties recognise this accountability, the decision which the managers make concerning ethical legitimacy constitutes the starting point for all positive and negative consequences for which they can be held accountable. In order to uphold this accountability, they have to identify the ethical standpoints from which their ethical footprints will be judged and somehow to overcome the circularity of the argumentation of legitimacy on all sides.

We propose that the latter can be achieved by firstly addressing the issue, not about which ethical principles should be applied and why, but about the extent to which ethics can be legitimised at all, by whom or by what. In other words, senior managers have the opportunity – and arguably the obligation – to radically question the phenomenon of ethical legitimacy per se which, in turn, requires that they adequately understand the phenomenon of ethics. This paper is intended to contribute not only to the deepening of that understanding but also to catalysing fundamental change in the field of ethics. To this end, we further propose that a particular perspective of ethical neutrality, which we will henceforth term ‘anethicality’ – since this perspective lies outside the confines of merely human ethics – and a linkable perspective of ‘a-certainty’, which, by definition, lies outside the paradigm of certainty and uncertainty, could bring radical movement into the fields of humanity’s self-understanding, of its future in an increasingly digitalised world and of its footprinting on the planet Earth.

It is arguable that a greater quality and quantity of resources should be allocated to achieving this radical movement than is being invested annually into the whole field of digitalisation: it is arguably regrettable that AI-development is creating a need for the development of human ethics and not vice versa. One could ask if it would not be significantly more beneficial to humanity and other sentient beings if human ethical development were to take place sooner and faster than AI development.

1.3 Unde venis et quo vadis, contemporary ethics

Before illuminating the above reflections in more depth and addressing the potential space which anethicality and a-certainty might have to offer, we will examine the nature of the ethics underlying predominant developments in digitalisation and AI as well as the challenges which the increasingly digitalised world could have in store for private and corporate life if the field of ethics remains stagnant. Significantly, we will be referring to the enduring attractiveness of the ethical legacy of Friedrich Nietzsche which, it has often been claimed, was strongly influential on ideological developments which led to the First and Second World Wars. In tracing the roots of Nietzscheanism back as far as the works of Homer, Dante Alighieri and Giacomo Leopardi we aim to contribute to a deeper understanding of where much of contemporary ethics in the field of digitalisation has come from and where it is leading.

By way of introduction, we note that in the early period of the 20th Century, Jewish intellectuals were interested in the works of Nietzsche to such an extent that they were regarded by right wing factions in France as Nietzscheans (Schrift, 1995); we also note that, during the First World War, German soldiers were given copies of one of Friedrich Nietzsche’s most famous works to boost their militaristic patriotism. (Aschheim, 1994) What is it about the works of Nietzsche which could have appealed to both Zionists and anti-Semitic nationalists and what could the link be to the contemporary development of AI and SI?

In the book which was distributed to the German soldiers, ‘Also sprach Zarathustra’, Nietzsche writes the famous proclamation that God is dead, which, in the context of his writing, refers centrally to the god of Christianity. (Nietzsche, 1883) In this and other works, e.g. (Nietzsche, 1882), Nietzsche reflects that, in being able/worthy to kill their belief in God, the perpetrators themselves become god, i.e. that Christian godliness is a concept created by its believers and that believers must have the faculty to create their god in the first place in order to be able to kill him later.

In the same works, Nietzsche also coins the terms

- ‘Übermensch’ (the Super-Human, enhanced beyond human limitations to perfection),

- ‘Untermensch’ (the Inferior-Human, the undesirable antithesis of the Super-Human) and

- ‘letzter Mensch’ (the Last-Human, who lazes in comfort and abundance).

Interestingly, at the 2017 SU-GS in San Francisco, terminology was used like ‘augmented intelligence’, ‘better people’, ‘human enhancement’ and ‘enhanced participants’ (a term which was applied to superior-level SU-GS participants) – terms which seem to be strikingly close to Friedrich Nietzsche’s concept of the Super-Human.

Given the historical significance of the cultural reception of the ethical premises in the works of Nietzsche in countries such as China, Cuba, France, Germany, Italy, Japan, Russia and the United States of America from the beginning of the 20th Century, we will reflect upon the extent to which not only concepts like the ‘Super-Human’ but also, more importantly, key underlying ethical premises in Nietzsche’s work are to be found in the motives, direction and consequences of a vast segment of current developments in digitalisation, AI and SI, not to omit questions concerning the ethical foot-prints of the drivers of these developments and their legitimacy.

As sociologists, historians and philosophers have been researching more and more deeply into the roots of socio-political movements in Europe, Asia and the Americas during the 20th Century, an increasing amount of information has come to light which indicates that political figures such as Mao Zedong, Che Guevara, Vladimir Lenin, Joseph Stalin and Benito Mussolini were all strongly influenced by central ethical premises in Nietzsche’s work. (Lixin, 1999), (Rosenthal, 1994). Similarly influenced were Charles de Gaulle, Theodore Roosevelt and Adolf Hitler, alongside philosophers, psychologists, sociologists and writers such as Jean-Paul Sartre, Emil Cioran, Osip Mandelstam, Max Weber, Hermann Hesse, Theodor Herzl, Sigmund Freud, C.G. Jung and Carl Rogers. Whilst the cultural and individual reception of Nietzsche’s works varies and whilst certain central premises in the works themselves, which were published between 1872 and 1889, are often portrayed in the secondary literature to vary, the most significant ethical premises which are also particularly relevant for this paper can be formulated as follows:

- the will to influence and determine (reverse-Darwinism and the will to power)

- amor fati and eternal autonomy (living the freedom of embracing one’s life in such a way as to want to live it all over again in exactly the same way)

- self-perfection and superhumanism

- human aesthetics (defiance of nihilism by saying ‘yes’ to – and in experiencing – life as a whole as beautiful, or at least certain presuppositions of such as temporality or necessity as beautiful (May, 2015)).

These four ethical premises are so closely intertwined that we must examine them as a whole rather than as separate items, an approach which in itself is crucial to understanding both Nietzsche’s works (which are perhaps ethically more complex and differentiated than has sometimes been portrayed) and to recognising parallels with current developments in digitalisation, AI and SI.

The will to influence presupposes that the human possesses the faculty to exert will, which means that the human is necessarily autonomous in action, in motives and in ethics. This ethical premise ‘posits’ no god or other transcendental authority who/which might, for example, bestow humans with the free-will to either conform with, or sin against, preordained ethics. In affirmatively assuming a state of being free to one’s own devices, the human-being confronts itself with an apparent choice between two necessities, as follows, ones which we will term ‘self-assigned ethical imperatives’.

We find this imperative expressed by Emil Cioran, for example, with the sentence:

As an alternative to choose from, we have

This imperative we find expressed by Friedrich Nietzsche with the words:

In contrast to the oeuvres of Cioran, who, in one place writes that he would like to be as free as a stillborn child (Cioran, 2008) (p.1486), Nietzscheanism involves imperatively embracing human creation and passion through, and with, the will to influence, and thereby to be free and alive. (Church, 2012) (p. 135) This latter ethical premise brings with it both the freedom and the imperative to seek ultimate sensations. (Church, 2012) (p. 145) In necessarily affirming one’s natural passions, one defies dogmas such as Christianity which, by virtue of its preaching the suppression of natural passions, is deemed by Nietzscheanism to

- negate natural human life on earth,

- position true life in an after-life – such as one in heaven or hell – and

- introduce the debilitating mental phenomena (or ‘mindsets’) of having expectations and ulterior values which steer one’s thoughts, feelings and actions and which lead one to need to justify the latter according to an externally-, i.e. theistically-, pre-ordained set of ethical premises.

In possessing and embracing the will to influence, one defies the passive fatalism of a Darwinist understanding of natural evolution: instead, one takes control over one’s own faculties and development. Amor fati involves the necessary affirmation of whatever has been, whatever is, and whatever will be. Enhancement is a necessity for the human condition and can be perceived to stem from the enhancement of the individual, i.e. one does, and should, strive to perfect the human body and mind both in the present and for the future; one does so in recognising that the authentic improvements made by one generation can be passed on to the next and that each individual, as an integral part of human continuity and as a ‘synthetic man’ who integrates the past (Church, 2012) (p. 247), contributes to its own longevity and eternal autonomy. The other option would be to let humanity decay and cease to exist.

It is important to be mindful of the historical contexts in which Nietzsche’s writings were being read and absorbed: a broad range of historians and sociologists tell us that, like many other politically engaged figures, Mao Zedong, Vladimir Lenin and influential intellectuals around them deplored the remains of feudalism which they perceived to permeate their respective societies with a ‘peasant’ mentality; they regarded the roots of these remains of feudalism as the antithesis of the energising ethical premises which they found in the works of authors like Nietzsche; the ‘peasant’ mentality represented an immense, if not insurmountable, hindrance to the development of a world-class nation of super-humans; Marxism did not offer a solution which matched the character-traits and aspirations of individuals such as Mao Zedong or Joseph Stalin, nor did it contribute to the attainment of international competitiveness or even supremacy in the way in which ‘Nietzscheanism’ could do. Consequently, the influence of central ethical premises in Nietzschean works on the socio-political developments of China and Russia can be seen to be greater than those of Karl Marx, even though Nietzsche’s works were formally banned in various communist countries from time to time. The relevance of this matter to the current paper is critical for the following reason: whilst Nietzschean thinking and its underlying ethical premises have been associated with some of the causes of ‘humanitarian atrocities’ such as those of the two World Wars, falling at least temporarily into wide disrepute, a very similar cluster of ethical premises, those which concern us here and which do indeed incite the self-legitimisation of individual and collective supremacy, can

and

Key proponents of AI and SI at the SU-GS promised a new salvation for humankind in reversing global warming, enabling the human race to become a multi-world species, running tourist flights to Mars, creating a world of abundance, eradicating both all human medical illnesses and authoritarianism etc.

the audience was given to understand in various synonymous formulations throughout the event

Listening between the lines, one of the common core messages of most key speakers could be interpreted as being explicitly energising-entreating and implicitly excluding-threatening, i.e.

Writing in the Financial Times, Robin Wigglesworth cites something very similar in relation to the use of AI which is purported to be revolutionising the management of money, e.g. in the form of natural language processing (NLP):

It is significant for our discussion that Mao Zedong, Vladimir Lenin, Adolf Hitler, Charles de Gaulle, Theodore Roosevelt and many others who were inspired by works which notably included those of Nietzsche rose to positions of large-scale influence and became driving forces behind the socio-political and economic developments of the 20th Century. Very often couched within such developments lay the phenomenon of inclusion and exclusion in a form which was quite possibly accentuated through the circular and Nietzschean twist of self-determined legitimacy. Either one empowered oneself to adopt and embrace the posited ethical credo, or not:

In other words, at that time, any consequences such as dis-enfranchisement and exclusion were self-inflicted. Today, national economies have the option to include themselves through digital compliance and digital assertion or to exclude themselves and bear the consequences on their own shoulders. In turn, political parties have the option to include themselves in the forces exerted by the economy and consumers or to bear the self-inflicted consequence of being ousted or not even voted for.

Interestingly, we find that not only the word ‘anxiety’ (see above) but also the word ‘inclusion’ – according to Google’s N-Gram – has been progressively increasing in prevalence in recent years, suggesting that it, too, is a significant issue in contemporary society. (Google, 2017b)

Also, we note that the vocabulary currently being used in the fields of the development of digitalisation, AI and SI which we have been analysing seems, in general, not to include terms such as ‘grace’ or ‘pity’, let alone ‘shame’ or ‘guilt’. Nietzsche himself explicitly deplored the concept of ‘pity’, i.e. pity for the Lower-Level Human, just as he held the concept of egalitarian democracy in low regard. (Nietzsche, 1888) As Vladimir Jelkic remarks:

Higher individual and collective status naturally co-occurs with individual and collective inferiority – without which the concepts of the Super-Human, superintelligence and supremacy have no significance. Accordingly, those who do not, or cannot, embrace the development of technology-based enhancement and Super-Humanism, including those who stand in its way, are, by implicit definition, collectively Inferior-Humans. It follows that to have pity on such humans would logically and ideologically not fit with the set of four ethical principles outlined above. According to Nietzsche’s credo, the concepts of ‘love for humanity’ and ‘pity’ belong to the undesirable ethical principles which Christianity had been propagating for many centuries and which had helped the weak to maintain their grip on power (Froese, 2006) (p. 118); further, those who do not, or cannot, embrace human aesthetics as the justification of existence and life have factually opted for the first of the two possible self-assigned ethical imperatives given above, which is nihilism wherein human life has no value. Given the fact that any consequences would be self-determined, it would be unfitting for others to feel or show pity for such people. It follows that there would be no sense of bad conscience or guilt towards those who find themselves in a condition of self-inflicted dis-enfranchisement – which helps us to understand why, as mentioned in the Introduction above, Jay Ogilvy writes:

The black-and-white dichotomy between superior and inferior to be found in Nietzsche’s legacy – i.e. his ethical footprint – manifests itself today both overtly and covertly in the eastern, western, northern, southern, digital and non-digital hemispheres. In this vein, the futurist Robert Tercek comments in an article on digital convergence:

In the work of digitalisation specialists such as Professor Venkatraman (Venkatraman, 2017b), we see that the monetarisation of big data has become the secret to exponential growth, commercial success and supremacy, as the new meaning of the abbreviation ‘URL’ clearly indicates:

Those commercially intelligent companies which aspire to market superiority are currently hunting, according to Venkatraman, for any and all opportunities to capture data and turn it into monetary value. One example is how search engines can gather data about consumer decisions at what is termed the ‘zero moment of truth’, i.e. when consumers are researching for products. The search engines process the data which they glean from online search-behaviour so that product information can be adapted and placed in such a way as to help and influence consumers in huge masses; the tools of the search engines can even identify commercially viable products and services which do not yet exist. Not only are the daily online search and credit-card behaviour being minutely monitored, statisticised, valorised and monetarised but also attitudinal information and habits from a rapidly increasing number of walks of life, including the personal health, the mobility, the political leanings and beliefs of billions of people.

Whilst the commercial objective may be to monetarise the data and not to make people paranoid about being followed and monitored, what can possibly be seen to be happening from a critical, societal perspective is a return to the scale of psychological dis-enfranchisement which emerged at the beginning of the 20th Century. That was when millions of men and their families were arguably psychologically and physically conscripted into a status with a strong likelihood of yielding amor vitae meae (love of my life) for a statistical entry into a list of named and unnamed gravestones. This time, at the beginning of the 21st Century, one could argue that psychological dis-enfranchisement is not ethicised, as it was then, under the imperative of a nationalistic vision of amor/salus patriae (the love/salvation of the country), but under the imperative of a global vision called salus humani generis (the salvation of humanity). Many of the proponents of AI and SI are making it abundantly clear that the achievement of this global vision will be impossible without the ingredients of superintelligence which lesser humans lack, or lack access to.

In the case of Microsoft, the publicly available formulation of the corporate credo is:

At Facebook, one finds Mark Zuckerberg’s vision formulated as follows:

A significant inherent element which these two statements share is that of power-ownership: the latter is, of course, a prerequisite for the empowerment of those who possess the faculty to be empowered. Zuckerberg’s formulation ‘that works for all of us’ may or may not contain a Freudian slip in its ambiguity: Who is meant by us? Is it every human-being or every dominant player in social media? Who is the person doing the work? – And for whom?

Whilst it is arguable that figures such as Mark Zuckerberg cannot be made ethically accountable for what has factually developed out of their creations, it can be conjectured that social media like Facebook, LinkedIn, Twitter, WhatsApp etc. operate today with a hidden agenda, i.e. with a conscription-like strategy which one might formulate along the following lines:

Whilst establishing the veritable ethics behind the visions, missions and strategies of these companies would require interviews and reflections (Robinson, 2016) with the key managers, certain hints can be gained from examples such as the following report in the Guardian:

Commenting on Zuckerberg’s response to the question why Facebook took over 24 hours to remove the video from public access and why such material is not immediately removed but allowed to stay online due to stringent internal policies, Michael Schilliger writes in the Swiss newspaper, NZZ:

Schilliger comes to the conclusion that it is Facebook’s business model, namely ensuring maximum attractiveness to its users, which dictates how policies and decisions are made: in other words, the commercial ethics of ‘maximising advertising revenues and monetising as much gathered data as possible’ overrides the societal ethics of ‘respecting personal dignity’ and ‘sympathising with the individual in times of personal tragedy’. As discussed in relation to the phenomenon of cultural differences in ‘The Question of Intent in Joint Ventures and Acquisitions’, (Robinson, 1993) differences of ethical standpoint can lead to irreversible interpretations of intent, often undesired ones. In the case at hand, those who place their focus on societal ethics can interpret Facebook’s commercial ethics and intent to be that of self-empowered commercial self-interest and indifference towards the powerless, insignificant individual.

In effect, current developments in the application of digitalisation seem to constitute a continuation of the process of uninventing the individual to which Nietzsche’s works contributed at the end of the 19th Century. When, as mentioned above, Nietzsche proclaimed that (Christianity’s) humans had killed the god whom they themselves had created and that they had become their own god in deeming themselves worthy of doing so, arguably, he tried to circumvent the logical consequence of his thesis, namely that they had, at the same time, effectively ‘killed’ themselves in wiping out the foundation for their own individual ethical significance: his attempt at circumvention manifests itself in his vision of the reverse-Darwinist creation of the Super-Human. One is tempted to wonder if this would be a new generation of ‘jesus christs’ or of the ‘self-chosen few’. The vision of this Super-Human which – Nietzsche leaves us to interpret – would be the embodiment of the being which creates the set of fundamentally new values, which he posits as a necessity for the attainment of genuine eternal autonomy and freedom, effectively throws a black shadow of inferiority over the contemporary human species: the latter is the Last-Human, i.e. a creature of insurmountable ethical inadequacy and thereby of moral insignificance. Of necessity, Nietzsche’s Super-Human must repudiate his/her/its parents/creators. The parent-child bond, and with it the family, are torn asunder. This atomisation of the human relationship into independent constituents constitutes a mental act and an ethical premise which – not surprisingly given Nietzsche’s rejection of Christianity – stand in contradiction to one of the ten commandments of the Hebrew Bible and which, in the era of digitalisation, have far-reaching consequences for self-understanding, for individual mental health, for social unity, for corporate culture and for further developments in AI and SI.

In parallel to the implications of the vision of the Super-Human, the ethical premises and circularity behind Nietzsche’s vision of amor fati which, etymologically at least, can be interpreted to mean not only

but also

could erase any remains of moral significance which future society might otherwise have inherited from the pre-Super-Human condition. In positively affirming amor fati for the human condition, Nietzsche was arguably implicitly and positively affirming the human condition’s futility. Nietzsche would appear then to have replaced the dualism of the ‘is-entity’ and the ‘should-entity’ with another, even more fundamental one, i.e. self-created meaningfulness and self-created meaninglessness.

The dismantling of the ethics of Christianity, a religion which Nietzsche viewed in ‘Der Antichrist’ (Nietzsche, 1888) as being based on a nihilistic worldview, and the dismantling of the ethics of other forms of theism, brings with it a deconstruction of the ethics of the credo upon which modernism and liberal democracy were built, i.e. the ‘moral individual’ (as defined below).

The notion that the Super-Human would ‘transvaluate’ or re-evaluate values, i.e. build ethics from zero – that is, if the Super-Human were to find the word and concept of ‘ethics’ at all appropriate – seems, when we contemplate what could emerge from AI, SI and singularity, very similar to our contemporary predicament.

It would not be surprising, therefore, to find that, wherever our Nietzschean-like cluster of four ethical premises underlies the creation and the use of digital instruments such as the internet, social media, soft robots and other AI-artefacts, the central pillar of liberal democracy – i.e. the moral individual – is also progressively eroding.

At this point, it is crucial that we differentiate between the concept of ‘individuality’ and that of the ‘moral individual’, a distinction which is expounded by Larry Siedentop in his book entitled ‘Inventing the Individual’. (Siedentop, 2014) Here, Siedentop defines ‘individuality’ as an aesthetic notion, the origins of which are to be found in the Renaissance. The ‘cult of individuality’, as he formulates it, is a cultural phenomenon which gradually evolved through the later movements of humanism and secularism into its fully-fledged, modernist state. In contrast to its counterpart, the moral individual (which is defined below), the phenomenon of individuality seems today not to be eroding, but to be finding increasing resonance in the space created in atomistic, utilitarian, secular and egalitarian segments of global society, where rationality, reason and empiricism override ‘irrational’ belief and ‘unreasoned’ moral convention, thereby de-legitimising – or having little place for – the form of regret, shame, bad conscience or guilt which pervades the whole person’s BEING.

It is in this new space that individuality can thrive in the acts of both creation and consumption of digital artefacts. Through the atomisation of BEING, individuality has now become the promotion, legitimisation and act of active self-expression and individual happiness, whereby:

In his treatise on ‘The Divided Individual in the Political Thought of G.W.F. Hegel and Friedrich Nietzsche’, which is the subtitle of ‘Infinite Autonomy’, Jeffrey Church writes that Hegel argued individuality to be an ultimate consequence of the atomisation and alienation of individuals:

In order to make as clear and as relevant a distinction as possible between ‘individuality’ and the ‘moral individual’, we propose – following Siedentop – that the latter involves a non-atomistic ethical premise whereby the individual’s personal, internalised ethics or ‘ethical innerness’ plays a determining role in his/her physical behaviour, attitudes and thoughts in alignment with collective, societal ethical innerness. Thus, this understanding of the moral individual involves the holistic form of the concept of ‘integrity’, one which captures an authenticity comprising both inner wholeness and moral uprightness in society. The moral individual has a moral conscience which is ‘one’ with that person’s identity. Leaders in the Western world who stray too far from the moral individual and lose their ethical authenticity do so irreversibly at their own peril, as we have seen with numerous political figures such as Bill Clinton and Tony Blair.

In Dante’s ‘Divine Comedy’, which we referred to in ‘If You Have A Vision’ (Robinson, 2016a), one finds scores of examples of the moral individual, each portrayed with particular succinctness. Dante treats the physical body, the moral character and the professional occupation of his chosen figures in the ‘Divine Comedy’ as being unavoidably – in the Nietzschean sense of self-determined fate – ethically contingent upon, and congruent with, each other. This one-ness, which is fundamental to the invention and concept of the moral individual, is described and commented on by Erich Auerbach in his book ‘Dante, Poet of the Secular World’ (Auerbach, 2007) and serves as an illustration of the converse of atomism, i.e. of holism and ‘non-duality’. The latter, originally an ancient Hindu and Buddhist notion, is expounded by David Loy in his book ‘Non Duality’, (Loy, 1997) and signifies a non-atomised state of BEING from which ultimate integrity, one-ness and un-contradictoriness emerge.

It is particularly relevant at this point to note that Dante conceived (i.e. gave birth to) his moral individuals and wove them into his poetic story-line whilst he himself was subject to a strongly theistic and strongly supremacy- and commercially-driven, often simoniac social environment. In the vocabulary of our discussion, the figureheads of this social environment had ordained that he should be banished from life in Florence since he had chosen not to affiliate himself with the meritocratic Black Guelfs who supported the papacy; as a result of his deeds and ethical leanings, he found himself in a self-inflicted, permanent exile and a situation of the self-dis-enfranchisement of what he felt to be his natural identity.

The ‘Divine Comedy’ reveals how Dante had started to reflect, as Nietzsche did six centuries later, on his perceptions of a lack of integrity in the practice of Christianity. This Christian environment was ruled on Earth at that time from the Vatican by the Bishop of Rome whom believers expected to be a moral role model. The incumbent Bishop of Rome, Pope Boniface VIII, was Benedetto Caetani, a man whose legitimacy to hold such an office Dante fundamentally questioned on ethical grounds. In the Divine Comedy, Dante reveals that he perceives there to be a faith-shattering, disqualifying incongruence and contradictoriness between the individual, Benedetto Caetani, and the holy office of the Bishop of Rome, not least because of the suspicion that this new pope had orchestrated a self-legitimised and self-legitimising power-grab from his predecessor, Pope Celestine V, Pietro Angelerio. As the Divine Comedy unfolds, one realises that Dante applies his poetic licence to paint each figure, including Pope Boniface VIII, as an individual whose self-inflicted, self-legitimised ethics are inextricably bound to their self-inflicted fate, for eternity. Dante, the dis-enfranchised exile, legitimises himself to be god and to ‘kill’, i.e. to dis-enfranchise at the core of identity, the figure whom he feels to be an ethically impostrous pope by allocating him a permanent place in Hell while he (the pope) was still alive. In affirmatively assuming the role of the theistic authority which he has negated, Dante arguably erases the last remains of his own moral significance. He does so not only with unique, aesthetic, poetic skill, but also with an ‘as-if’ form of poetic licence which evolves into a truly visionary comedy of human ethics. Positioned on the knife-edge which unites and divides the positive affirmation of both existence and nihilism, this comedy has inspired millions of people for almost seven hundred years.
Deeply embedded within his comedy we find meaning and meaninglessness juxtaposed. Dante plays with the centrality and the triteness of ethical premises, those vital yet tenuous ‘as-if’ phenomena posited by human BEING and capturing its essence – i.e. the as-if phenomena which can integrate into the ethical systems underlying individual and collective identity or disintegrate such identity, as we will explore below. The central role which as-if phenomena play in the creation and realisation of corporate and other visions is examined in depth in the article mentioned above (Robinson, 2016a). What concerns us here is their role and attributes at the core of human ethics, as illuminated in the works of Dante and Nietzsche, and why a deep understanding of these phenomena could be sagacious for those contributing to and/or influenced by developments in AI and SI. We will return to these points in Section 2.

The parallels between the ‘Divine Comedy’ and the works of Nietzsche (who made several references to Dante during his literary career) are striking at the level of the ethical premises which we have been discussing, i.e.

- will to influence, including the inherent circularity in relation to legitimacy,

- amor fati (Rubin, 2004, pp. 127-130),

- human aestheticism,

- the inherent nihilism which underlies a positive, visionary, ‘as-if’ affirmation of life (Robinson, 2016a).

As a short illustration in recapitulation of what we have discussed so far, Robert Durling offers the following translation of Dante’s original lines in the ‘Paradiso’ section of the ‘Divina Commedia’:

Condensed into just three aesthetic lines of poetic ‘as-if’ licence, Dante commences with the ethical premise of the positive affirmation of human existence which finds expression in the self-legitimised statement of personal will by the speaker of whom Dante is enamoured, Beatrice. This ethical premise of the will to influence is then emphatically contextualised by not one, but two explicit references to the paradigms of certainty-uncertainty and belief-doubt: clearly – as with Kant’s ‘categorical imperative’ (Kant, 1871) – self-assigned faith, i.e. the human affirmative imperative, is contingent upon its counterpart, nihilism. The human condition is thus atomised, the moral individual un-invented, and Dante’s belief in integrity shattered into the shards of as-ifs upon which human BEING has landed with its tender feet. As also discussed above in relation to Nietzsche’s works, the phenomenon of human ethicality with its inherent circularity becomes clear in the message that fate comes to those who have the faculty for that particular fate, and hence for amor fati: merit comes only to those who are meritorious, and those who are not meritorious unavoidably find their own self-inflicted fate – through no fault of the meritorious. Not only does Dante portray the receiving and the attaining of grace as being reciprocally contingent, he also pre-empts Nietzsche with the aspiration of humanity towards the attainment of perfection and ethical meritocracy.

Returning to Siedentop’s book, ‘Inventing the Individual’ (Siedentop, 2014), we note that, whilst he does not make any reference to the ‘Divine Comedy’ or to Dante, he does underline the significance of Pope Boniface VIII in relation to the evolution of the moral individual. Siedentop plots the creation of the moral individual in steps which include the following:

In his treatise on the invention of the moral individual, Siedentop’s lack of reference to the ethical legacy of either Dante or Nietzsche – whether deliberate or not – allows him to evade the thesis that the uninventing of the moral individual was induced by

- Christianity’s negational suppression of natural passion and aestheticism,

- its promise of conditional salvation from human sin in an after-life,

- its atomisation of the family-unit and

- its escape from humanity’s ethical inadequacy through the non-human conception of a superhuman.

Whilst literature on the fate of the moral individual appears to be scarce, there is a wealth of publications on the erosion of values in contemporary society, some of which – rationalists, in particular, might argue – need to be treated with caution or scepticism. What most of these publications share, regardless of whether the contents are based on empirical evidence or not, is that their authors base their observations and theses on the premise that societal ethics should be preserved, i.e. on the premise that societal ‘shoulds’ and ‘should-nots’ have a legitimacy and are a necessity in guiding, condoning, tolerating, rewarding, denouncing, censuring and punishing people’s mental and behavioural acts.

From the perspective of anethicality which, as remarked earlier, lies outside the confines of solely human ethics, there are several phenomena at play in contemporary society which could explain the perception that societal values are indeed eroding in many communities. These include the likelihood that:

- the principles of the market economy and digital development now pervade such vast segments of life and society that there has been a marked proportional shift from societal ethics to business and engineering ethics

and

- national and party politics as well as voting at local and national elections are being influenced in such a fashion by what is now termed ‘digital citizenship’ that the nation-state is being un-invented, cf. ‘Digital Citizenship and Political Engagement’ by Ariadne Vromen (Vromen, 2017).

Evidence of phenomena such as the first one can be found in research studies, e.g. one conducted at the University of Bonn, which concludes:

Michael Keating, professor of politics at Aberdeen University, sees a trend which is very closely linked:

Given Larry Siedentop’s observation that the emergence of the moral individual correlates with the emergence of the nation-state, it is comprehensible that the un-invention of the one will go hand-in-hand with that of the other.

Looking at the phenomenon of the extensive pervasion of digitalisation and atomisation, could it be that we are indeed approaching ethical singularity, Nietzschean trans-valuation and entering into uncharted, supra-ethical or ethic-less territory?

Could it be that the more digitally-conditioned generations and segments of society are – perhaps inadvertently – indicating to their less digitally-conditioned counterparts, and society at large, that the advent of AI and SI is challenging much more than merely the way in which values are assigned different priorities?

Could it be that the legitimacy of, and the necessity for, ethical ‘shoulds’ and ‘should-nots’ are being challenged?

Or, referring back to our earlier discussion, could it be that the appropriateness of any form of imperative which emerges together with the mental act of a positive affirmation of human existence is being challenged?

If Keating is right that the integrity of the nation-state has disintegrated (Keating, 2017), then so also has the ethical integrity of the individual.

1.4 Ethical integrity: Why have we invented such an impediment to ethical transformation?

When a person A says that a person B lacks ethical integrity, A often means that B has been behaving in an unethical manner. A deems B’s thoughts and behaviour to stem from a set of values and ethical premises which are different to A’s and thereby bad. Sometimes, A means that B has been erring from accepted ethical norms. As these examples show, the term ethical integrity can, on the one hand, be used to express ethical divergence and exclusion. If, on the other hand, A were to praise B on the grounds of his/her ethical integrity, then A would be expressing feelings of ethical congruence and inclusion.

In general terms, members of an ethical community are expected to behave in an ethically acceptable and predictable fashion; community members expect themselves and others to conform and tend to express their dislike – or even abhorrence – of non-conformists in an ostracising manner.

Being based on the premise of mono-ethicality, the concept of ethical integrity serves to strengthen intrinsic rigidity within systems of ethical premises both in the individual and in the community. The use of the term ‘ethical integrity’ implies, of course, that ethical laxity and divergence do indeed exist and also that they should be avoided and admonished in the interests of the survival of the community: in other words, the diversity, plurality and malleability of human nature should not be left undisciplined. Applying the terminology of our previous discussion, we can recognise that, for many centuries, disciplined adherence to a specific set of as-if ethical premises and conventions, i.e. ethical integrity, has served to provide individuals and communities with existential certainty. It follows that ethical integrity inherently expresses the anticipation of – and, quite often, the fear of – existential uncertainty.

Thus, it is reasonable to argue that ethical integrity has been invented as a key criterion for social cohesion, identity and BEING by making human thoughts and behaviour either reciprocally predictable and trustworthy or fear-generating and untrustworthy: whilst ethical malleability and transformation run the danger of generating fear, untrustworthiness and existential uncertainty (particularly in mono-theistically conditioned cultures (Robinson, 2014)), mono-ethical integrity is a sine qua non for an affirmative, certainty-creating and -preserving human condition (both for individuals and communities), including affirmative nihilism (Cioran, 2008).

1.5 Uninventing ethics: Is the development of AI and SI challenging ethical integrity?

What we are possibly now heading towards is an age, already anticipated by the digitally-conditioned generations, in which the mindset of creating existential certainty – through the assumption of ethical premises as the concretisation of a meaning to life – finds itself compelled to co-exist and interact with the artefacts of AI which apparently have no such mindset, i.e. do not (need to) search for a meaning to their existence, have no emotion-binding ethics, have no need for certainty, but do have the capacity to process very high volumes of data with no suppression of information through psychological factors or through volume limitations due to restricted retrieval capacity.

Could it be that a fundamental shift in global ethics is already underway or, returning to the earlier discussion, could it be, in the immediate term at least, that a significant proportion of these sensor- and algorithm-packed, probability-calculating AI-artefacts will simply remain instruments of the ethics of human economic, political or racial supremacy?

Some of the fears which are emerging in relation to the latter scenario in particular have been captured by Jon Kofas and include the following:

Such fears could have their roots in an ethical standpoint which differs from the original cluster of ethical premises which we have been discussing. The fears implicitly warn us that, unless something changes at the level of core ethical premises, increasingly large segments of humanity could be following the path of the Nietzschean ethical footprints which ultimately end in

and

until

Currently, in accordance with the adage of ‘seeing is believing’, internet and media consumers process so much visual information about other people and cultures that they realise increasingly that their personal worldview is just one of a potentially infinite number. Through watching the world news and documentary films they see that humanity is so multi-religious, multi-secular, multi-atheist, multi-democratic etc. that no single worldview can be veritably valid – let alone universally valid – and certainly not their own.

They also see that a high proportion of individuals and groups take ethics into their own hands with negligible levels of unified global recognition or sanctions; if there are any consequences at all, these can differ so widely from one culture, nation-state and geopolitical union to another that, even though the legal systems of most nation-states were designed to restrict it, individuality-based ethics and behaviour must be legitimate.

They see beheadings, hangings, suicides, thefts, acts of rape and terrorism broadcast publicly in the media, alongside the live-filming of people being swept away to their deaths by natural disasters, alongside trillions of snapshots of people sunning themselves, enjoying their individuality and following their passions. They see uncountable documentary films about different worldviews or creatures – living, procreating, dying or already extinct – alongside one film after another re-casting previous versions of history, science and truth. They also see air-borne drones and earth-bound robots doing tasks – including outwitting the world’s best chess-players – with exponentially increasing levels of ‘dexterity’, precision, tirelessness, effectiveness, efficiency, calculatory and data-retrieval capacities which threaten to dwarf human capability into what may be felt to be humiliating insignificance in numerous sectors of professional and private life.

Viewed from the perspective of the ethical premise of self-determination, the effects on consumers of prolonged interaction with these media and artefacts could include the stripping of their dignity and the banalisation of their ethical integrity and personal identity. For people who have been ethically conditioned to cherish the premise of personal dignity, something which even those in the more advanced stages of dementia try to preserve for as long as possible, this erosive process may have serious psychological consequences. It is no coincidence that organisations which support people who wish to retain their dignity by exercising self-determination in relation to the termination of their own lives have chosen names such as ‘Dignitas’ (which is based in Forch, Switzerland) and ‘Death with Dignity’ (in Portland, USA).

For many people, suicide or euthanasia are their final earthly acts of ethical integrity, their will to practise affirmative, self-legitimised certainty, to achieve eternal autonomy and amor fati. The powerlessness which they feel can be physical, psychological and often both. Many suicide notes express a need to autonomously put an end to the inner torment of lost dignity, powerlessness or heteronomy and thereby to assert their ethical integrity.